A few recent studies have shown that pairing humans with AI leads to the best results. The combination outperforms AI alone, and it’s also better than humans alone.

A study that came out this week showed:

“Consultants using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without.” - Ethan Mollick

I highly recommend reading Ethan Mollick’s summary of that study here.

OK great, so should we just ask everyone to start using GPT-4 in their day-to-day work?

Not quite.

One problem is that the better the AI, the harder it is to get humans to keep putting in effort. This creates a strange paradox: when paired with humans, a basic AI can sometimes perform better than an advanced one. For example:

With highly capable driverless cars, humans may literally fall asleep at the wheel, with potentially deadly consequences.

In law, I think there’s a similar risk with automated AI drafting tools: if these get better, will lawyers stop ‘checking’ the suggestions the tools make?

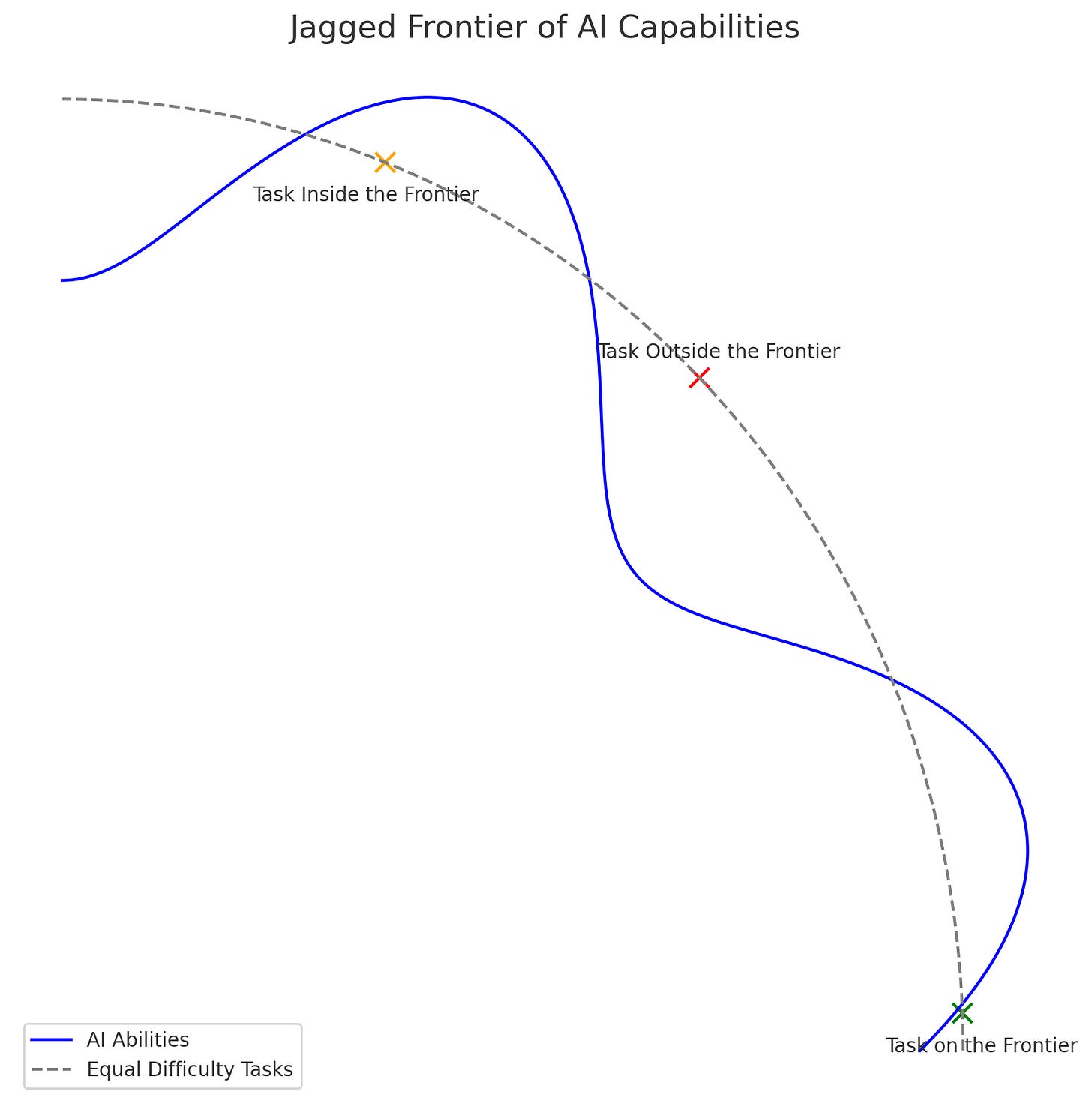

A separate problem is that it’s not always clear where we can trust AI and where we can’t. This is called the “Jagged Frontier” of AI. The boundary between what AI can already do well and what’s just out of its reach is unknown, and it doesn’t seem to follow our intuitions.

The ideal set-up

This means the best set-up is one where:

humans are still involved

humans have enough to do to stay engaged, rather than getting bored and falling asleep at the wheel

the human tasks are the ones AI is most likely to get wrong.

Centaurs and Cyborgs

In his article, Ethan explains that the best results came from consultants who were able to gain the benefits of AI without the downsides. This requires a very deliberate approach.

These consultants followed either the centaur approach or the cyborg approach.

Centaur approach

The centaur approach is to draw a clear, strategic line between the parts done by the human and the parts done by the AI.

The split is informed by what AI is known to be particularly good or bad at. For example, I find that AI is good at coming up with article ideas but not so good at writing in my tone of voice. So I often ask GPT to come up with 10 article ideas, then pick one and do 100% of the writing myself.

Cyborg approach

The cyborg approach is where human and AI work on a task jointly: their contributions are blended, not separate.

For example, we’re currently testing the cyborg approach in our contract playbook tool (LexPlay). The lawyer provides the playbook heading and a few sentences on their preferred positions and typical fallbacks, and the tool uses these to create a detailed, expansive first draft of the playbook entry. The lawyer can then make as many tweaks as needed before finalising it.

Our tests show that this blended approach produces higher-quality entries than when either lawyers or AI do 100% of the work.

Note: if you’re a lawyer and want to beta test the AI playbook builder, hit reply to this email, or get in touch with me on LinkedIn here!

In any case, this makes me believe that wholesale replacing humans with AI is not the logical next step. The next step is figuring out how to combine the two to get the best out of both.

Thanks for being here,

Daniel